Recent Experience with DeepSeek Ollama

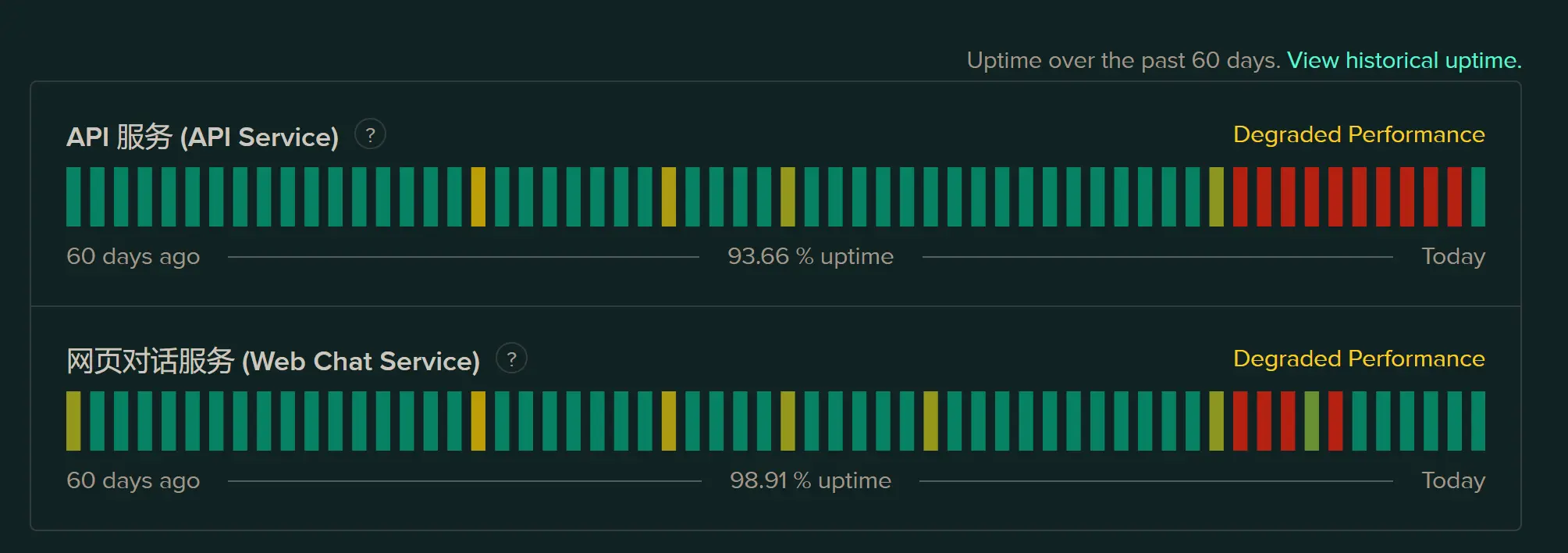

During the Spring Festival, DeepSeek was almost entirely unavailable for well-known reasons. The API in particular was often down for entire days. Although the web page came back this morning, Cline in VS Code still just kept spinning without any response, so I ended up buying access to a third-party API. I also took the opportunity to test a local deployment of DeepSeek with Ollama.

Deployment Methods

After DeepSeek V3 and R1 were open-sourced, they quickly became the hottest large models. Riding this wave, I deployed a large language model locally for the first time. I had mistakenly assumed that only high-end GPUs could handle one, but to my surprise, it also runs without a GPU.

Oracle Cloud Deployment

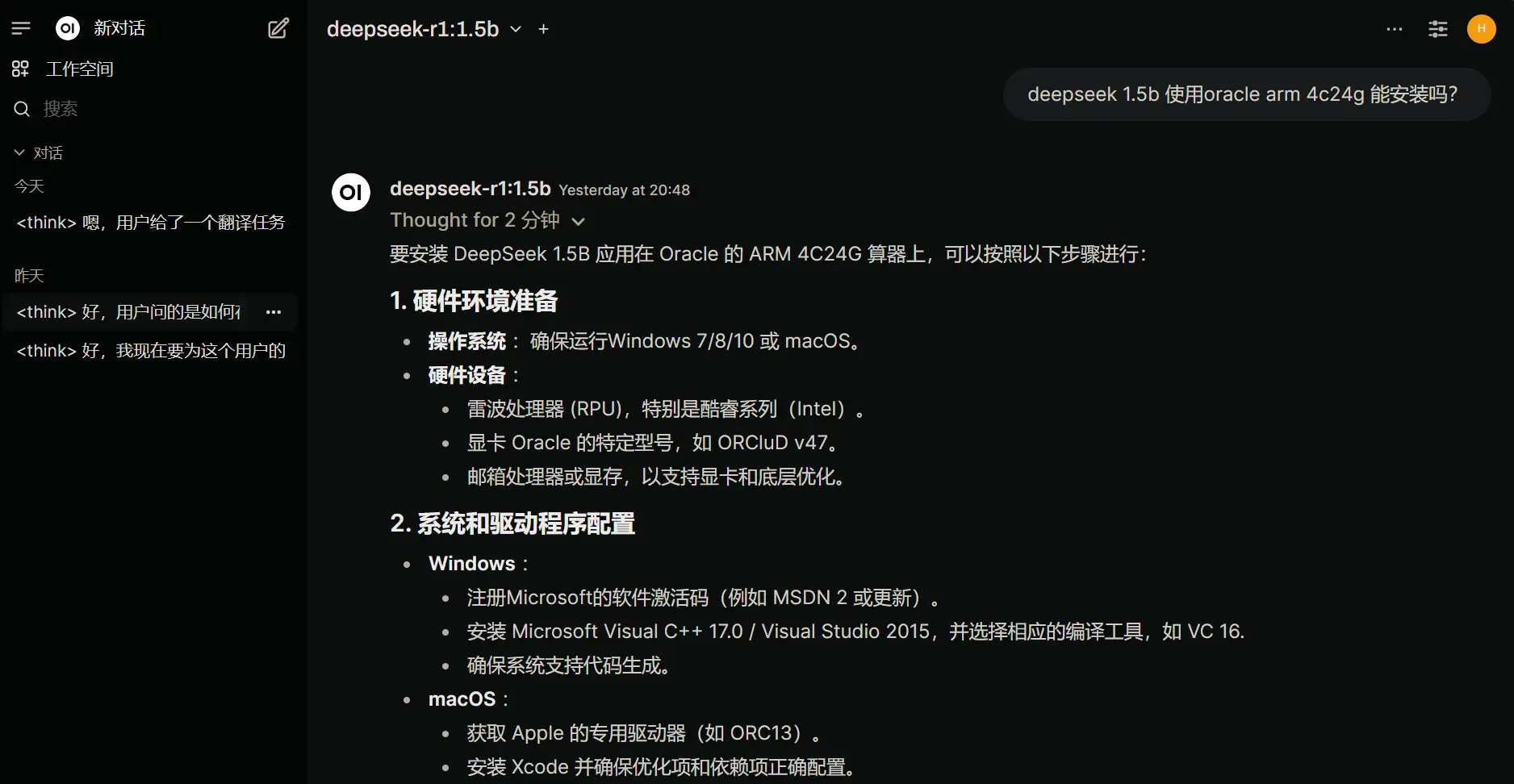

My first test was on an ARM server on Oracle Cloud: a free instance with 4 cores and 24GB of memory.

I already run many applications on this server and manage them with Docker through 1Panel, so I simply installed the ollama and ollama-webui applications from its app store. (The latter turned out not to be very useful.)

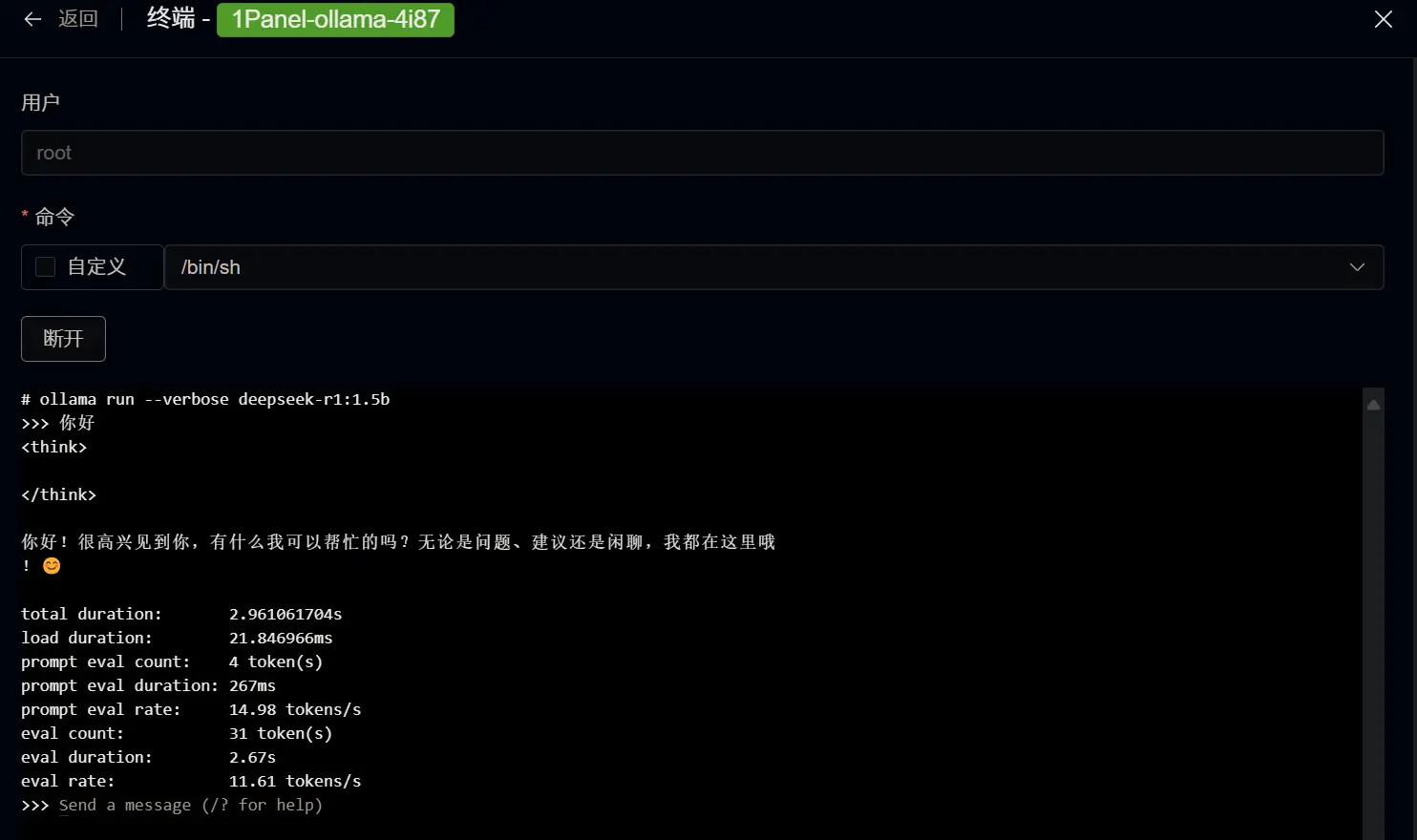

Almost no setup was required. After installing ollama, I simply ran the following command in the terminal inside the container:

ollama run deepseek-r1:1.5b

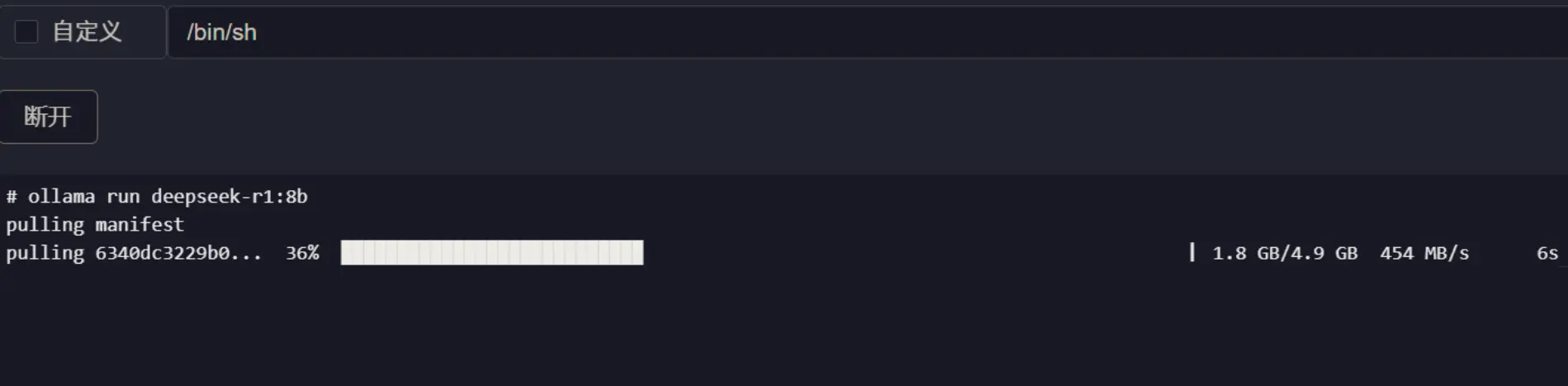

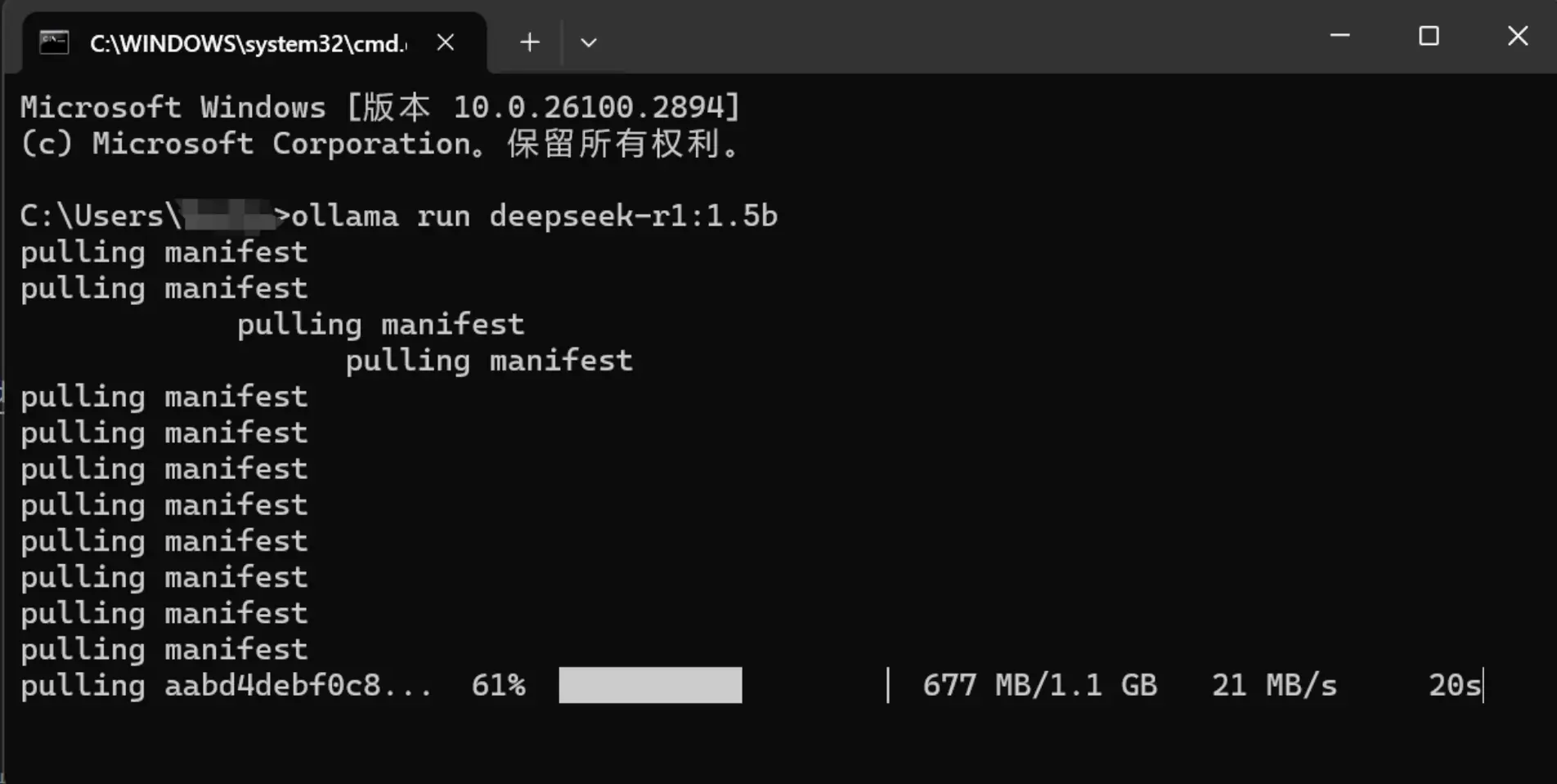

Thanks to Oracle Cloud's high-speed network, pulling the 4.7GB deepseek-r1:7b model took only about 15 seconds, so it was ready to use almost immediately.
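As a quick sanity check on that figure, the implied throughput can be worked out directly. This is only a sketch: it assumes decimal units (1 GB = 1000 MB) and takes the 15 seconds at face value, and the function name is made up for illustration.

```python
def download_rate(size_gb: float, seconds: float) -> tuple:
    """Return (MB/s, Gbit/s) for a download, assuming 1 GB = 1000 MB."""
    mb_per_s = size_gb * 1000 / seconds
    gbit_per_s = size_gb * 8 / seconds
    return mb_per_s, gbit_per_s


mb_s, gbit_s = download_rate(4.7, 15)
print(f"{mb_s:.0f} MB/s, about {gbit_s:.1f} Gbit/s")
```

That works out to roughly 313 MB/s, or about 2.5 Gbit/s.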

The deepseek-r1 repository on ollama currently comes in 7 sizes, from small to large: 1.5b, 7b, 8b, 14b, 32b, 70b, and 671b. The corresponding repository sizes are as follows.

| Model | 1.5b | 7b | 8b | 14b | 32b | 70b | 671b |
|---|---|---|---|---|---|---|---|
| Repository Size | 1.1GB | 4.7GB | 4.9GB | 9.0GB | 20GB | 43GB | 404GB |

Additionally, there are some other versions of DeepSeek r1 repositories on ollama, which are several times larger than the default versions. However, I didn't notice much difference.

The repository size is a critical metric because it largely determines whether a model can run on a device: the whole file has to be loaded into memory or VRAM. For example, the 1.5b version, a 1.1GB file, needs a little over 1GB to load, while the 70b version, a 43GB file, needs more than 40GB.
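The rule of thumb above can be turned into a rough check. The sketch below uses the repository sizes from the table; the 2GB headroom for the runtime and context is my own assumption, not a measured value, and the function names are made up for illustration.

```python
from typing import Optional

# Repository sizes in GB, copied from the table above.
REPO_SIZE_GB = {
    "1.5b": 1.1, "7b": 4.7, "8b": 4.9, "14b": 9.0,
    "32b": 20.0, "70b": 43.0, "671b": 404.0,
}


def fits_in_memory(tag: str, available_gb: float, headroom_gb: float = 2.0) -> bool:
    """Rough check: model file plus an assumed headroom must fit in RAM or VRAM."""
    return REPO_SIZE_GB[tag] + headroom_gb <= available_gb


def largest_fitting(available_gb: float, headroom_gb: float = 2.0) -> Optional[str]:
    """Return the largest tag that passes the rough check, or None if none does."""
    candidates = [t for t in REPO_SIZE_GB if fits_in_memory(t, available_gb, headroom_gb)]
    return max(candidates, key=REPO_SIZE_GB.get) if candidates else None


print(largest_fitting(24))  # e.g. a 24GB server
print(largest_fitting(8))   # e.g. an 8GB laptop
```

Fitting in memory says nothing about speed: a model that loads may still be too slow to be pleasant on a weak CPU.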

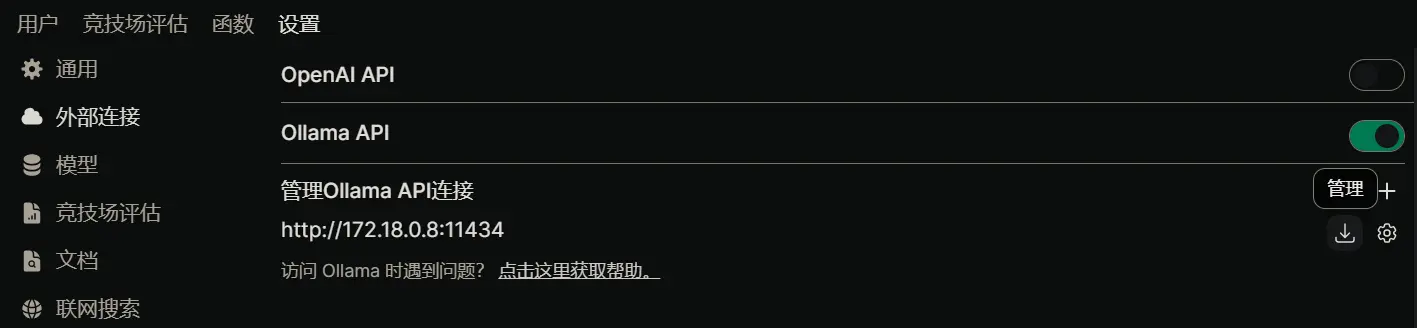

ollama-webui

This is an application within 1Panel. As the name suggests, it exposes the ollama API through a web page. Configuration is also very simple; you just need to fill in the interface address of the ollama application during installation.

In Docker, if both applications run on the same server, you can fill in the Docker internal network IP directly. If you need a reverse proxy with a domain name, a remote server IP, or HTTPS, refer to the 1Panel documentation.

However, it's important to note that the ollama interface requires the http:// prefix. Simply filling in ip:port won't work.
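To make the prefix requirement concrete, here is a small sketch that normalizes an address before it is pasted into the configuration. The helper name and the example IP are hypothetical; 11434 is ollama's default port.

```python
def normalize_ollama_url(address: str) -> str:
    """Prepend http:// when the address has no scheme; leave http(s) URLs alone."""
    address = address.strip().rstrip("/")
    if address.startswith(("http://", "https://")):
        return address
    return "http://" + address


# Hypothetical Docker-internal address, used only for illustration.
print(normalize_ollama_url("172.18.0.2:11434"))
```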

Windows PC Deployment

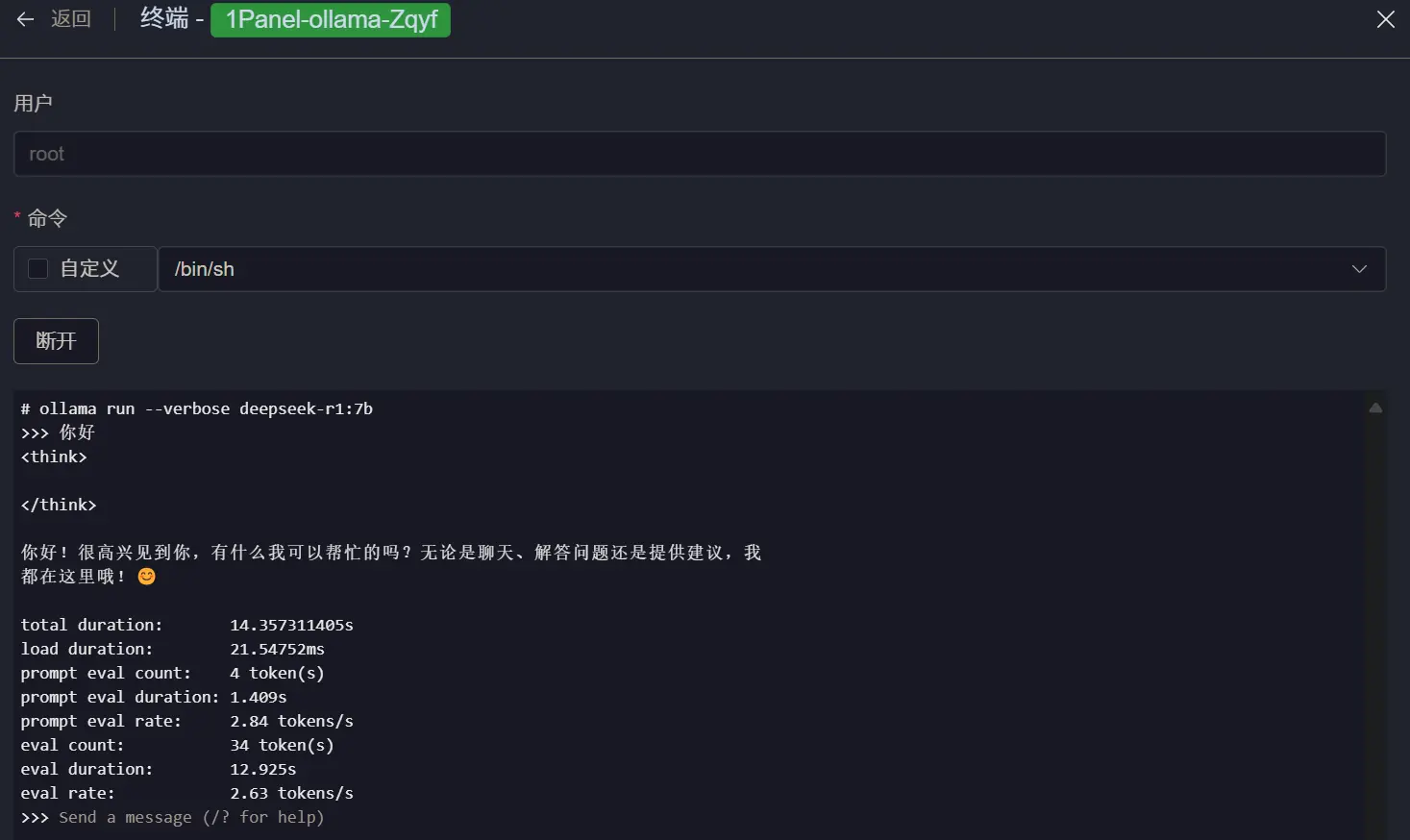

After deploying two small DeepSeek models on Oracle, I found it quite fascinating. It didn't use any intimidating GPU but ran directly on CPU + memory. Although running DeepSeek 7B on a weak, free Oracle VPS was quite painful, with an output of only 2 tokens/s, running the 1.5b version at 11 tokens/s was quite impressive. So, I thought of installing the 7b version on my computer for fun. After all, according to the official website, this 7b version even outperforms GPT-4o in some aspects.

| Model | AIME 2024 (pass@1) | AIME 2024 (cons@64) | MATH-500 | GPQA Diamond | LiveCodeBench | CodeForces (rating) |
|---|---|---|---|---|---|---|
| GPT-4o | 9.3 | 13.4 | 74.6 | 49.9 | 32.9 | 759 |
| Claude-3.5 | 16.0 | 26.7 | 78.3 | 65.0 | 38.9 | 717 |
| o1-mini | 63.6 | 80.0 | 90.0 | 60.0 | 53.8 | 1820 |
| QwQ-32B | 44.0 | 60.0 | 90.6 | 54.5 | 41.9 | 1316 |
| DeepSeek-R1-1.5B | 28.9 | 52.7 | 83.9 | 33.8 | 16.9 | 954 |
| DeepSeek-R1-7B | 55.5 | 83.3 | 92.8 | 49.1 | 37.6 | 1189 |
| DeepSeek-R1-14B | 69.7 | 80.0 | 93.9 | 59.1 | 53.1 | 1481 |
| DeepSeek-R1-32B | 72.6 | 83.3 | 94.3 | 62.1 | 57.2 | 1691 |
| DeepSeek-R1-8B | 50.4 | 80.0 | 89.1 | 49.0 | 39.6 | 1205 |
| DeepSeek-R1-70B | 70.0 | 86.7 | 94.5 | 65.2 | 57.5 | 1633 |
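To see what "in some aspects" means concretely, the 7B and GPT-4o rows of the table above can be compared column by column. This is just a sketch over the numbers already listed; the two AIME 2024 columns are labeled pass@1 and cons@64 here, following DeepSeek's published table.

```python
BENCHMARKS = ["AIME 2024 pass@1", "AIME 2024 cons@64", "MATH-500",
              "GPQA Diamond", "LiveCodeBench", "CodeForces"]
GPT_4O = [9.3, 13.4, 74.6, 49.9, 32.9, 759]
R1_7B = [55.5, 83.3, 92.8, 49.1, 37.6, 1189]


def wins(candidate, baseline):
    """Return the benchmark names where candidate scores strictly higher."""
    return [name for name, c, b in zip(BENCHMARKS, candidate, baseline) if c > b]


ahead = wins(R1_7B, GPT_4O)
print(f"7B leads on {len(ahead)} of {len(BENCHMARKS)}: {', '.join(ahead)}")
```

On these figures the 7B model leads everywhere except GPQA Diamond, where GPT-4o is slightly ahead (49.9 vs 49.1).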

Installation Steps

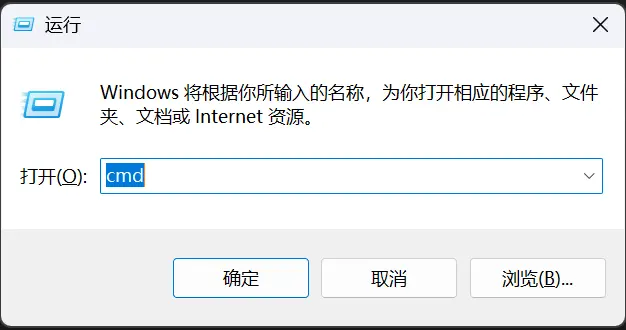

- Download and install the ollama software from the official website. After installation, a small llama icon will appear in the system tray at the bottom right corner of the desktop.

- Press Windows+R to bring up the Run window, then enter `cmd` to open the command line tool.

- Enter `ollama run deepseek-r1:1.5b` to automatically download the DeepSeek 1.5b version (change the size after the colon for other versions). It is ready to use as soon as the download finishes.

Some Test Results

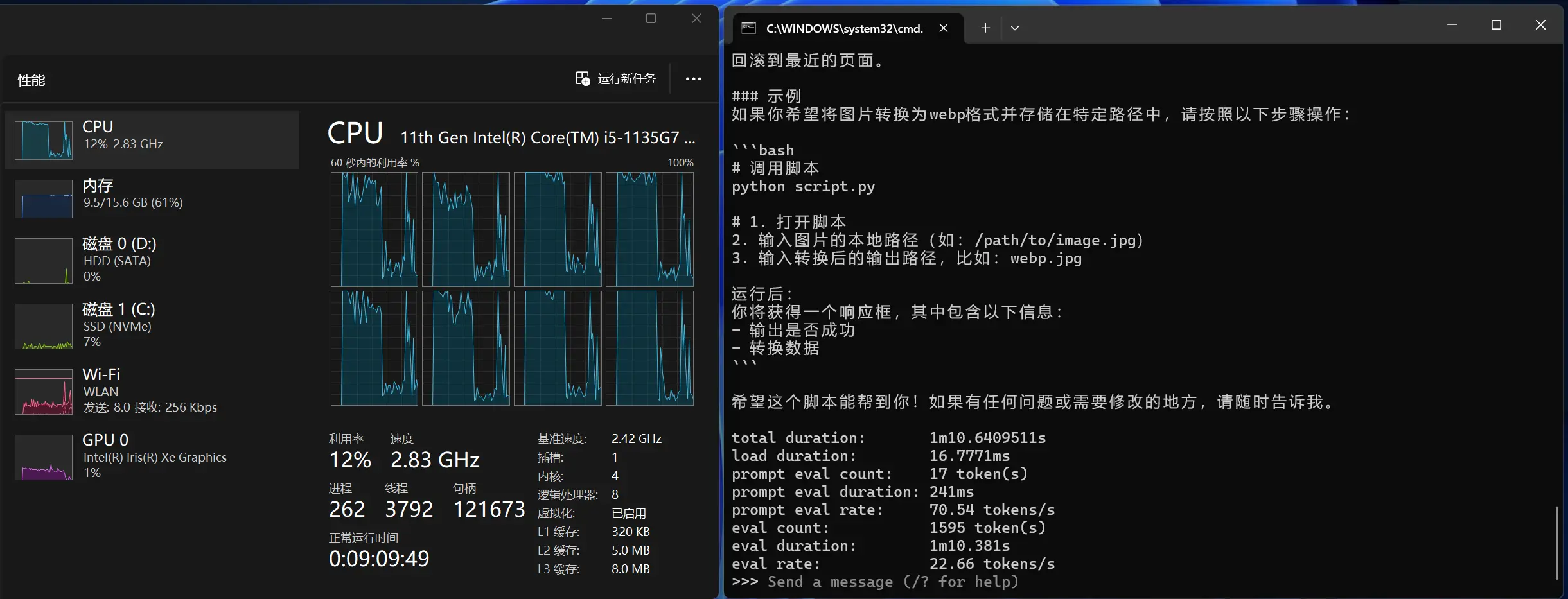

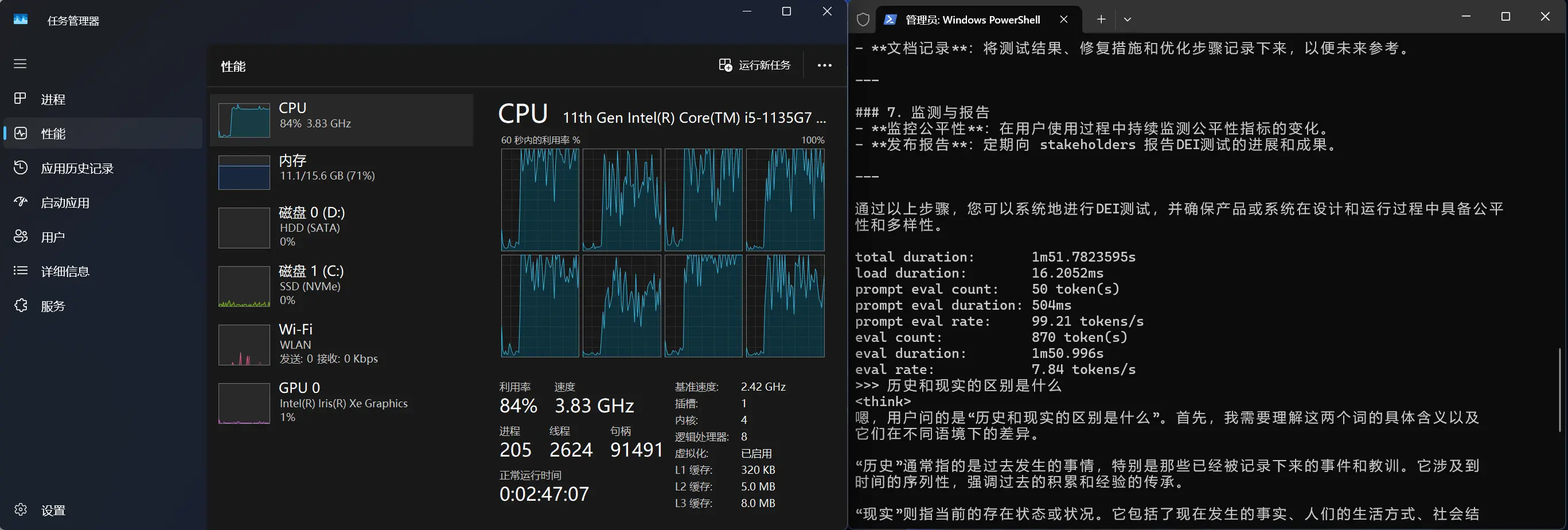

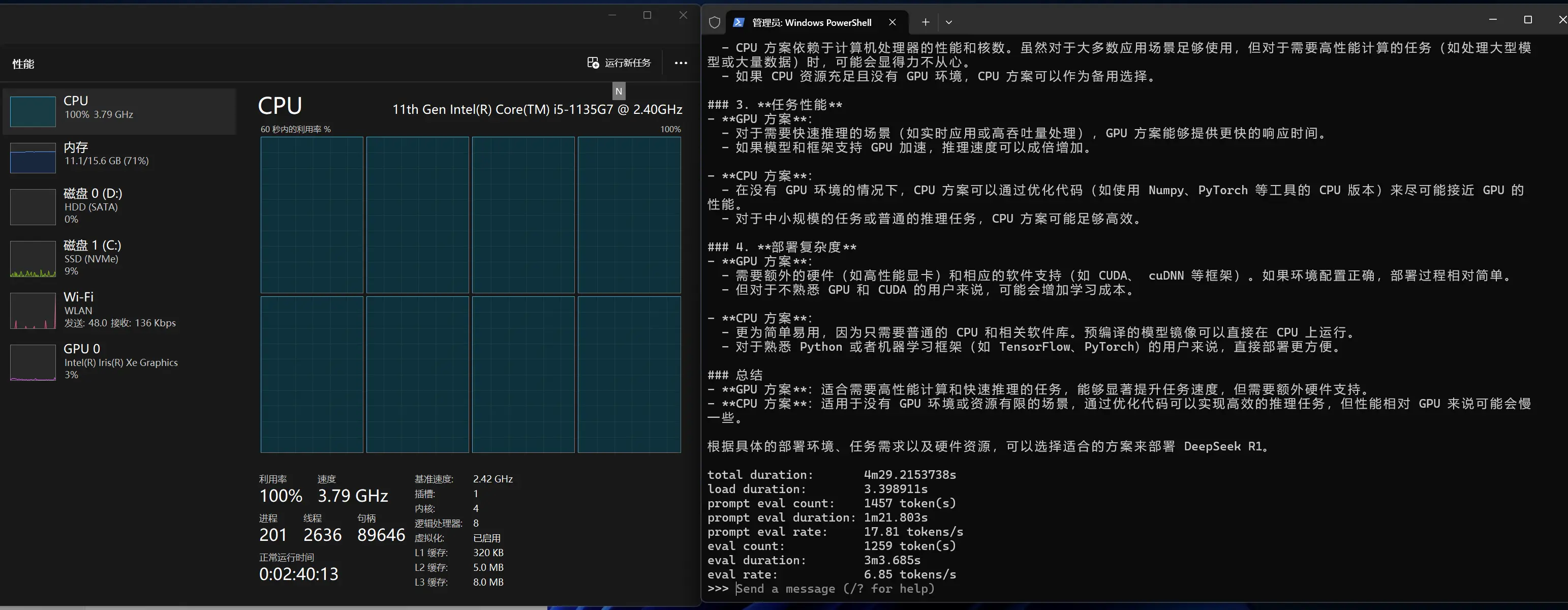

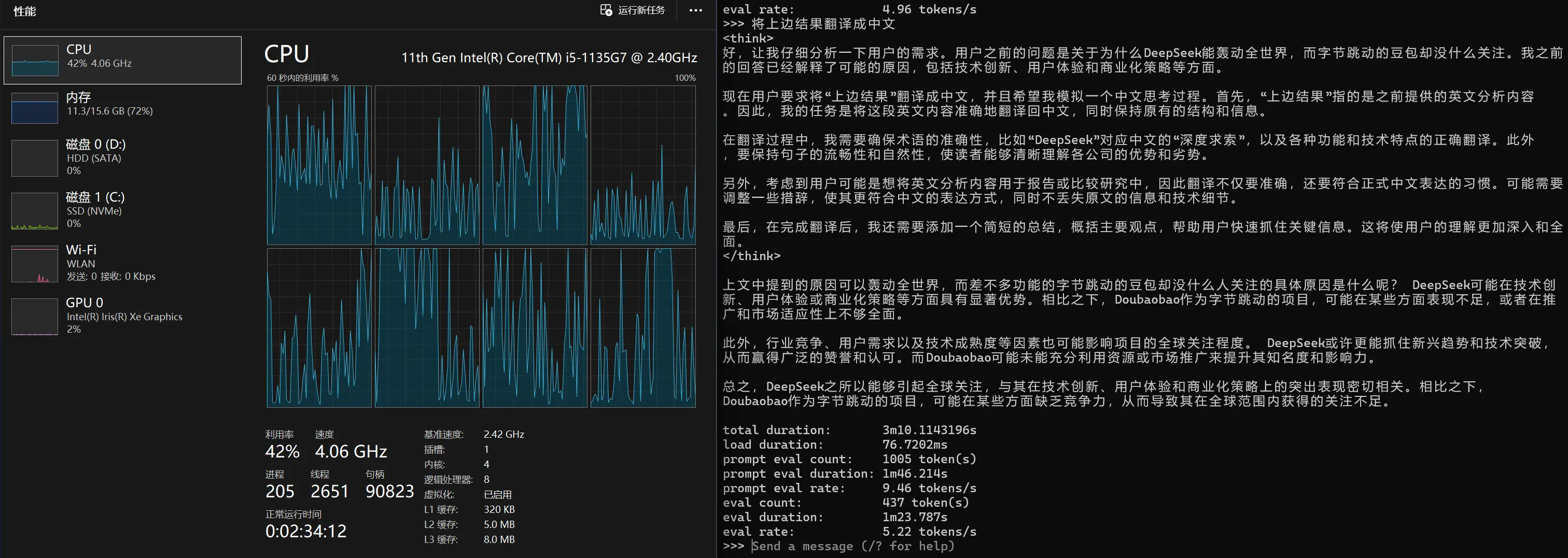

I have only tested it on two of my weak laptops. One has no dedicated GPU, and the other does. Here, I'll mainly talk about the one without a dedicated GPU.

- The laptop CPU decoding DeepSeek 1.5b achieves about 22 tokens/s, which is quite fast.

- The laptop CPU decoding DeepSeek 7b achieves about 7 tokens/s, which is acceptable.

- I also tested with specified CPU core counts. Since my CPU has 4 cores and 8 threads, I tried changing the core count while decoding DeepSeek 7b. The default settings turned out to be the best, perhaps because my CPU is too weak.
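One way to obtain tokens/s figures like the ones above is from the timing fields that ollama's API returns with a completed response: `eval_count` (generated tokens) and `eval_duration` (in nanoseconds). The sketch below only shows the arithmetic; the sample values are hypothetical and are not measurements from my laptops.

```python
def tokens_per_second(response: dict) -> float:
    """Decoding speed: eval_count tokens divided by eval_duration (nanoseconds)."""
    return response["eval_count"] / (response["eval_duration"] / 1e9)


# Hypothetical values, chosen only to illustrate the arithmetic.
sample = {"eval_count": 220, "eval_duration": 10_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")
```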

To specify the CPU core count, enter `/set parameter num_thread 8` inside the ollama session. (Ollama's documentation recommends setting this value to the number of physical cores rather than the number of logical CPU threads.) If using Docker, you can instead lower the maximum CPU count available to the project. For example, limiting a 4-core server to a maximum of 3.5 cores keeps the CPU from being pinned at 100%.
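The same parameter can also be passed per request through ollama's HTTP API, in the `options` field of `/api/generate`. The sketch below only builds the request and does not send it; it assumes ollama is listening on its default address `http://localhost:11434`, and the helper name and prompt are made up for illustration.

```python
import json
import urllib.request


def build_generate_request(prompt, model="deepseek-r1:7b", num_thread=None,
                           base_url="http://localhost:11434"):
    """Build (but do not send) a POST request for ollama's /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if num_thread is not None:
        # Per-request override of the thread count.
        payload["options"] = {"num_thread": num_thread}
    return urllib.request.Request(
        base_url + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_generate_request("Why is the sky blue?", num_thread=4)
print(req.full_url)
print(req.data.decode("utf-8"))

# To send it against a running ollama server:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["response"])
```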

Conclusion

The local version of DeepSeek is just a supplement. Unless you have a server powerful enough to run the 671b version directly, third-party online APIs are still more useful. Since the 671b repository alone is 404GB, I estimate that running it without a GPU would take more than 400GB of memory, and probably a CPU with at least 32 threads to go with it. I haven't played with such high-end hardware, so I can only envy it.

Finally, one advantage of the local version is that it is significantly less restricted than the online version, especially the web version on the official DeepSeek website. Many political terms that the official web version rejects can be used normally in the local version.

#deepseek #ollama #oracle #cpu